Microsoft® SQL Azure™ Database is a cloud-based relational database service built on SQL Server® technologies. According to Microsoft, SQL Azure offers the following:

- SQL Azure Database provides a highly available, scalable, multi-tenant database service hosted by Microsoft in the cloud. It helps to ease provisioning and deployment of multiple databases.

- Developers do not have to install, set up, patch or manage any software.

- High availability and fault tolerance are built in, and no physical administration is required.

- SQL Azure Database supports Transact-SQL (T-SQL). Customers can use existing knowledge in T-SQL development and a familiar relational data model for symmetry with existing on-premises databases.

- SQL Azure Database can help reduce costs by integrating with existing toolsets and providing symmetry with on-premises and cloud databases.

These statements sound very promising. Therefore I created a SQL Azure database in the cloud to see the current status of the cloud-based relational database service.

First of all, you need a SQL Azure account.

Request an invitation code for yourself. Within one day you will receive the invitation code, which enables you to create your own SQL Azure server. To create a server you need to specify an Administrator Username and an Administrator Password.

Now your server is created with one database: Master.

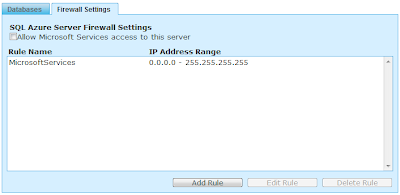

To access this server, you need to configure the firewall settings. Open the Firewall Settings tab and select the checkbox "Allow Microsoft Services access to this server". Select the rule MicrosoftServices and press the Edit Rule button. Now you can specify the range of IP addresses that are allowed to connect to your Azure server. In my example I have allowed all IP addresses.
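To make the idea of an IP range rule concrete, here is a minimal Python sketch of the check such a rule performs. The function name `ip_in_range` and the sample addresses are my own illustration, not part of SQL Azure; the real check happens on the server side.

```python
import ipaddress


def ip_in_range(client_ip: str, start_ip: str, end_ip: str) -> bool:
    """Return True if client_ip lies within [start_ip, end_ip], inclusive.

    Hypothetical helper: it only mimics what a firewall rule with a
    start and end address expresses.
    """
    client = ipaddress.ip_address(client_ip)
    start = ipaddress.ip_address(start_ip)
    end = ipaddress.ip_address(end_ip)
    return start <= client <= end


# A rule covering every IPv4 address, as in my example configuration.
allow_all = ("0.0.0.0", "255.255.255.255")
# A narrower rule (sample private range) that would be safer in practice.
office_only = ("10.0.0.1", "10.0.0.255")

print(ip_in_range("203.0.113.7", *allow_all))
print(ip_in_range("203.0.113.7", *office_only))
```

Allowing all addresses is convenient for a first test, but a narrower range is the better choice once you know where your clients connect from.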

Now we will create the first database. Select the Databases tab, press Create Database, and specify a database name and the size of the database. You can choose between 1 GB and 10 GB.
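If you prefer a script over the portal, the same choice could be expressed as a T-SQL statement run against the master database. The sketch below only builds the statement text in Python. The `CREATE DATABASE ... (MAXSIZE = ...)` form is my assumption about what the service accepts, and the helper name `create_database_statement` is hypothetical, so check the statement against the current documentation before using it.

```python
import re

# The two sizes offered in the portal at the time of writing.
ALLOWED_SIZES_GB = (1, 10)


def create_database_statement(name: str, max_size_gb: int) -> str:
    """Build a CREATE DATABASE statement for one of the two offered sizes.

    Assumption: the service accepts a MAXSIZE option in this form.
    """
    if max_size_gb not in ALLOWED_SIZES_GB:
        raise ValueError(f"size must be one of {ALLOWED_SIZES_GB} GB")
    # Accept only simple identifiers so the name can be embedded safely.
    if not re.fullmatch(r"[A-Za-z_][A-Za-z0-9_]*", name):
        raise ValueError("database name must be a simple identifier")
    return f"CREATE DATABASE [{name}] (MAXSIZE = {max_size_gb} GB)"


print(create_database_statement("MyFirstDatabase", 1))
```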

Press the Test Connectivity button to test the connection. You MUST have the MicrosoftServices firewall rule enabled in order to use this feature. Your database is created and you are ready to connect with SQL Server Management Studio (SSMS). For the Object Explorer you have to enter the server name and login credentials.

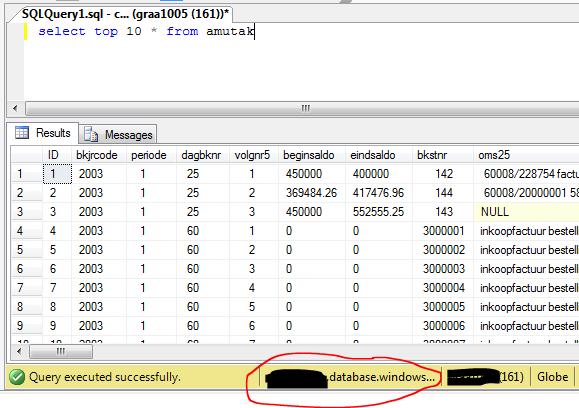

To open a new query, you need to specify the database you want to connect to. This can be done on the Connection Properties tab. The USE command is not supported for switching between databases, so establish a connection directly to the target database.
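The same rule applies when you connect from code: because USE cannot switch databases, the target database has to be named in the connection itself. Here is a minimal Python sketch that builds an ODBC-style connection string with the database included. The helper name `build_connection_string`, the default driver name, the `<server>.database.windows.net` host form and the `user@server` login form are assumptions on my part, so compare them with the connection string your portal shows.

```python
def build_connection_string(server: str, database: str, user: str,
                            password: str,
                            driver: str = "SQL Server Native Client 10.0") -> str:
    """Build an ODBC-style connection string that names the target database.

    Assumptions: host and login formats as described in the text above.
    Values containing ';' or '}' are not escaped in this sketch.
    """
    host = f"{server}.database.windows.net"
    parts = [
        ("Driver", "{" + driver + "}"),
        ("Server", f"tcp:{host}"),
        ("Database", database),        # chosen here, since USE is unavailable
        ("Uid", f"{user}@{server}"),
        ("Pwd", password),
        ("Encrypt", "yes"),
    ]
    return ";".join(f"{key}={value}" for key, value in parts) + ";"


# One connection string per database instead of switching with USE.
for db in ("master", "MyFirstDatabase"):
    print(build_connection_string("myserver", db, "admin", "secret"))
```

To work with a second database, open a second connection with its own connection string.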

Now you are connected.

Enjoy using SQL Azure.