Tuesday, December 28, 2010

Why is my keyboard changing to another languages?

On my business laptop and home desktop machine, both installed with Windows 7 64 bits, I have 2 keyboard languages installed. I have no idea why. I have English and Dutch. I only uses English but sometimes it switches during typing of a letter to Dutch. This is very annoying. I had no idea why, but today I discovered that a short cut is responsible for it.

The short cut ALT+SHIFT. For my all Windows short cuts are nice if you are aware of it. I think that most poeple are not aware of all short cuts of Windows 7, which can result in a negative user experience. If you really need to have more than one keyboard layout, you can use the ALT+SHIFT combination to change it back. If you only need one keyboard language you can remove the other keyboard layouts via:

Start, Control Panel, Clock Language and Region, Change keyboard or other input methods.

Tab Keyboards and Languages, press Change keyboards.

Now you can remove all keyboards you do not want to use.

Happy typing without the big suprise of a changed keyboard language.

Labels:

changed,

keyboard layout,

language,

Windows 7

Thursday, December 16, 2010

What triggers the update of statistics in my SQL 2008 database, when are the statistics out of date ?

Microsoft SQL Server 2008 collects statistical information about indexes and column data stored in the database. These statistics are used by the SQL Server query optimizer to choose the most efficient plan for retrieving or updating data. By default, SQL Server 2008 also creates and updates statistics automatically, when such an operation is considered to be useful. Sometimes it can happen that your statistics are not representative for your current data distribution which can result in a not efficient query plan. SQL Server 2008 determines whether to update statistics based on changes to column modification counters (colmodctrs).

If the statistics is defined on a regular table, it is out of date if:

- The table size has gone from 0 to >0 rows.

- The number of rows in the table when the statistics were gathered was 500 or less, and the colmodctr of the leading column of the statistics object has changed by more than 500 since then.

- The table had more than 500 rows when the statistics were gathered, and the colmodctr of the leading column of the statistics object has changed by more than 500 + 20% of the number of rows in the table when the statistics were gathered.

- For filtered statistics, the colmodctr is first adjusted by the selectivity of the filter before these conditions are tested. For example, for filtered statistics with predicate selecting 50% of the rows, the colmodctr is multiplied by 0.5.

More detailed information about database statistics can be found in this whitepaper: Statistics used by the query optimizer in Microsoft SQL Server 2008.

Labels:

fullscan,

performance,

statistics,

update_statistics,

updated

Monday, December 13, 2010

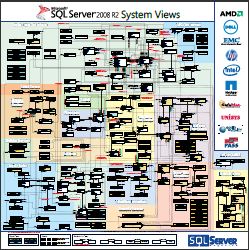

Microsoft SQL Server 2008 R2 System Views Maps.

The map is available in 2 formats:

- XPS

Labels:

Map,

SQL 2008 R2,

System Views

Wednesday, December 1, 2010

SQL Denali Codename Crescent what is it?

After the first sneak preview of Crescent during the keynote of Ted Kummert on SQLPASS we can talk about what Crescent is. Crescent is the code name of an ad-hoc reporting layer that will be released in the next version of SQL Server – Denali. Crescent is a major enhancement to SQL Server Reporting Services. Up to now, SQL Server Reporting Services (SSRS) didn't have a web-based report designer. Denali will change that by adding a brand new report authoring tool that will be powered by Silverlight. So, this will be the fifth report designer after BIDS, Report Builder 1.0 (not sure if RB 1.0 will survive SQL 11), Report Builder 3.0, Visual Studio Report Designer.

Besides bringing report authoring to the web, Crescent will redefine the report authoring experience and even what a report is. At this moment a Reporting Services report is static. Once you publish the report, the layout becomes fixed. If you want to make changes to the original design, such as adding new columns or switching from a tabular layout to a crosstab layout, you need to open the report in a report designer. Make your changes and republish the report. Crescent will change all of this and it will make the reporting experience more interactive and similar to Excel PivotTable. I have seen a preview of Crescent and indeed it really looks nice. It enables people in the boardroom to play with the data. The end user can quickly create an interactive report by dragging metadata, and then with a few mouse clicks change the report layout without switching to design mode. In fact, Crescent doesn't have a formal design mode.

I'm very enthousiastic about Crescent. I see a lot of opportunities. Based on information in static reports, decision makers wants to have more and or detailed information. With Crescent they can 'play' with the data and help themselves much better. There is only disappointing thing to mention: Crescent will only be available in SharePoint mode. For companies without an ICT department, deploying and maintaining of a Sharepoint server can be too complex. I think that the decision makers of these companies will have the same need to 'play' with the data.

Crescent is not available in Denali CTP 1, but I hope it will come available in CTP2. I'm looking forward to 'play' myself with the data.

Besides bringing report authoring to the web, Crescent will redefine the report authoring experience and even what a report is. At this moment a Reporting Services report is static. Once you publish the report, the layout becomes fixed. If you want to make changes to the original design, such as adding new columns or switching from a tabular layout to a crosstab layout, you need to open the report in a report designer. Make your changes and republish the report. Crescent will change all of this and it will make the reporting experience more interactive and similar to Excel PivotTable. I have seen a preview of Crescent and indeed it really looks nice. It enables people in the boardroom to play with the data. The end user can quickly create an interactive report by dragging metadata, and then with a few mouse clicks change the report layout without switching to design mode. In fact, Crescent doesn't have a formal design mode.

I'm very enthousiastic about Crescent. I see a lot of opportunities. Based on information in static reports, decision makers wants to have more and or detailed information. With Crescent they can 'play' with the data and help themselves much better. There is only disappointing thing to mention: Crescent will only be available in SharePoint mode. For companies without an ICT department, deploying and maintaining of a Sharepoint server can be too complex. I think that the decision makers of these companies will have the same need to 'play' with the data.

Crescent is not available in Denali CTP 1, but I hope it will come available in CTP2. I'm looking forward to 'play' myself with the data.

Labels:

crescent,

sql denali,

SSRS

Friday, November 26, 2010

Bad performance and lockings occur ad random on my database.

Within SQL server you can retrieve data via a query. To retrieve the data you need to specify the transaction isolation level. For read operations, transaction isolation levels primarily define the level of protection from the effects of modifications made by other transactions. A lower isolation level increases the ability of many users to access data at the same time but increases the number of concurrency effects (such as dirty reads or lost updates) users might encounter. Conversely, a higher isolation level reduces the types of concurrency anomalies that users may encounter but requires more system resources and increases the chances that one transaction will block another.

SQL server uses 4 levels to retrieve data:

In most situation a database is created by a specific applications. The isolation level of this application is leading for all other applications whio wants to connect to the same database. I will use Exact Globe and Exact Synergy as an example in this case.

Which levels of data retrieval is used by Exact Globe and Exact Synergy?

Within Exact Globe and Exact Synergy the runtime is configured to connect to the database with level 1. (Read Uncommitted). This means that read operations never will be blocked by others users who are also reading data with level 1 or by users who are modifying records. If somebody is reading data with level 2, they can block other users who are modifying or inserting records for the same table. In Exact Globe and Exact Synergy all transactions are stored in one table named GBKMUT. So it is very important to read all data with level 1 otherwise you will have a big chance that locks on this table will occur. Crystal Reports which are executed within the Exact Globe shell are always reading data with level 1. This also applies to the Exact Excel add-in.

Which levels of data retrieval are used by external applications to connect to a Exact Globe or Exact Synergy database?

External applications like Crystal Reports or Excel connects to the database with the default level 2. So it can happen that a big Crystal report will lock Globe 2003 users who are processing data. For instance an external Crystal Report on the orderliness tables (ORSRG) can lock a Globe user who is doing the fulfillment. Therefore it is very important to configure the level to retrieve data for external applications to level 1. This needs to be done per application. The most common applications I have seen are:

Start SSMS and select in the menu: Tools, Options

Select Query Execution, SQL Server, Advanced, SET TRANSACTION ISOLATION LEVEL)

Set it to READ UNCOMMITTED.

See also my blog about my favorite SSMS settings

How to configure the default isolation level to read uncommited for Crystal Reports ?

To use external Crystal Reports on a Globe 2003 or e-Synergy database it is important to use level 1 (Transaction isolation level READ UNCOMMITTED)

Visit the support web site of Crystal Reports and do a search on: 'isolation level' to find documents which explain how you can set the default isolation level for different Crystal Reports versions

How to configure the default isolation level to read uncommited for my customer made solution?

Contact your custom solution provider. More information about adjusting the isolation property can be found here.

How to detect the applications which connecs with the default isolation level read committed?

Use next query (as of SQL 2005 and higher). It will only show the applications which are running queries at that specific moment, you executed this query. Therefor it is usefull to execute this query on different moments of the day.

SELECT ss.Program_Name, Isolation_Level= Case Transaction_Isolation_Level WHEN '0' THEN 'Unspecified' WHEN '1' THEN 'Uncommitted' WHEN '2' THEN 'Committed' WHEN '3' THEN 'Repeatable' WHEN '4' THEN 'Serializable' WHEN '5' THEN 'Snapshot' END, sd.name AS DBName, spid, Host_Name, NT_User_Name, Memory_Usage * 8 Mem_KB, Reads, CPU_Time,ss.Login_Time, Last_Request_End_Time

FROM master.sys.dm_exec_sessions SS

INNER JOIN master..sysprocesses SP ON SP.spid=SS.session_id

INNER JOIN master..sysdatabases SD ON SD.dbid=SP.dbid

WHERE ss.session_id>50

AND Transaction_Isolation_Level <> 1

Enjoy, improving the overall performance of your database by avoiding unneccessary lockings.

SQL server uses 4 levels to retrieve data:

- Level 1: Read Uncommitted

- Level 2: Read Committed

- Level 3: Repeatable

- Level 4: Serializable

- Level 5: Snapshot (SQL 2005 Only)

In most situation a database is created by a specific applications. The isolation level of this application is leading for all other applications whio wants to connect to the same database. I will use Exact Globe and Exact Synergy as an example in this case.

Which levels of data retrieval is used by Exact Globe and Exact Synergy?

Within Exact Globe and Exact Synergy the runtime is configured to connect to the database with level 1. (Read Uncommitted). This means that read operations never will be blocked by others users who are also reading data with level 1 or by users who are modifying records. If somebody is reading data with level 2, they can block other users who are modifying or inserting records for the same table. In Exact Globe and Exact Synergy all transactions are stored in one table named GBKMUT. So it is very important to read all data with level 1 otherwise you will have a big chance that locks on this table will occur. Crystal Reports which are executed within the Exact Globe shell are always reading data with level 1. This also applies to the Exact Excel add-in.

Which levels of data retrieval are used by external applications to connect to a Exact Globe or Exact Synergy database?

External applications like Crystal Reports or Excel connects to the database with the default level 2. So it can happen that a big Crystal report will lock Globe 2003 users who are processing data. For instance an external Crystal Report on the orderliness tables (ORSRG) can lock a Globe user who is doing the fulfillment. Therefore it is very important to configure the level to retrieve data for external applications to level 1. This needs to be done per application. The most common applications I have seen are:

- SQL Server Management Studio, mostly the DBA administrator itself.

- Crystal Reports.

- Custom made solutions.

Start SSMS and select in the menu: Tools, Options

Select Query Execution, SQL Server, Advanced, SET TRANSACTION ISOLATION LEVEL)

Set it to READ UNCOMMITTED.

See also my blog about my favorite SSMS settings

How to configure the default isolation level to read uncommited for Crystal Reports ?

To use external Crystal Reports on a Globe 2003 or e-Synergy database it is important to use level 1 (Transaction isolation level READ UNCOMMITTED)

Visit the support web site of Crystal Reports and do a search on: 'isolation level' to find documents which explain how you can set the default isolation level for different Crystal Reports versions

How to configure the default isolation level to read uncommited for my customer made solution?

Contact your custom solution provider. More information about adjusting the isolation property can be found here.

How to detect the applications which connecs with the default isolation level read committed?

Use next query (as of SQL 2005 and higher). It will only show the applications which are running queries at that specific moment, you executed this query. Therefor it is usefull to execute this query on different moments of the day.

SELECT ss.Program_Name, Isolation_Level= Case Transaction_Isolation_Level WHEN '0' THEN 'Unspecified' WHEN '1' THEN 'Uncommitted' WHEN '2' THEN 'Committed' WHEN '3' THEN 'Repeatable' WHEN '4' THEN 'Serializable' WHEN '5' THEN 'Snapshot' END, sd.name AS DBName, spid, Host_Name, NT_User_Name, Memory_Usage * 8 Mem_KB, Reads, CPU_Time,ss.Login_Time, Last_Request_End_Time

FROM master.sys.dm_exec_sessions SS

INNER JOIN master..sysprocesses SP ON SP.spid=SS.session_id

INNER JOIN master..sysdatabases SD ON SD.dbid=SP.dbid

WHERE ss.session_id>50

AND Transaction_Isolation_Level <> 1

Enjoy, improving the overall performance of your database by avoiding unneccessary lockings.

Thursday, November 11, 2010

SQL Denali CTP available for download

The first CTP of the successor of SQL Server 2008 R2, codename SQL 'Denali' is available for download. It contains a lot new stuff. Keep in mind SQL server isn't just a database, it is an entire information platform by making use of SharePoint server. The need for a SharePoint installation can be a challenge for the companies with do not have or a very small ICT department.

Here is a short list of new high level topics:

My personal favorites are:

If you are interested to look around in the CTP version, you can download it here

Enjoy testing this new version.

Here is a short list of new high level topics:

- Project codename “Crescent”, a web-based, data visualization and presentation solution, and follow-on to the PowerPivot technology that is part of SQL Server 2008 R2

- Project codename “Apollo”, new column-store database technology aiming to provide greater query performance

- SQL Server AlwaysOn, a new high-availability “solution that will deliver “increased application availability, lower TCO (total cost of ownership) and ease of use

- Project codename “Juneau”, a single development environment for developing database, business intelligence (BI) and web solutions

- SQL Server Data Quality Services (based on technology from Microsoft’s 2008 Zoomix acquisition)

- Other data integration and management tools

My personal favorites are:

- Project codename 'Crescent'. This will help the people in the board room to easily analyze and play with the data they are looking at.

- Project codename 'Apollo'. Performance is one of my specialties in SQL Server. For every new SQL version I look forward to the improvements on performance. This new column-store database technology will indeed improve the performance of your datawarehouse significantly.

If you are interested to look around in the CTP version, you can download it here

Enjoy testing this new version.

Labels:

CTP,

Denali,

download,

SQL 2008 R2,

SQL Server

Tuesday, November 9, 2010

Announcement of SQL Azure Reporting CTP

Microsoft has announced SQL Azure Reporting service. Microsoft SQL Azure Reporting lets you use the familiar on-premises tools you’re comfortable with to develop and deploy operational reports to the cloud. There’s no need to manage or maintain a separate reporting infrastructure, which leads to the added benefit of lower costs (and less complexity). Your customers can easily access the reports from the Windows SQL Azure portal, through a web browser, or directly from your applications. SQL Azure Reporting enables developers to enhance their applications by embedding cloud based reports on information stored in a SQL Azure database. By using the SQL Data Sync CTP, you can upload data from your on premise database to a SQL Azure database. This SQL Azure database can be used by SQL Azure Reporting CTP.

You create your reports in the same way as your are doing it for an on premise SQL Reporting Server. The only difference is that you deploy your reports to the SQL Azure Reporting services.

Take a look at next video about SQL Azure Reporting services.

If you are interested sign up here for the SQL Azure Reporting CTP.

More detailed information about SQL Azure Reporting see:

http://www.microsoft.com/en-us/sqlazure/reporting.aspx

Enjoy creating your dashboards in the cloud.

You create your reports in the same way as your are doing it for an on premise SQL Reporting Server. The only difference is that you deploy your reports to the SQL Azure Reporting services.

Take a look at next video about SQL Azure Reporting services.

If you are interested sign up here for the SQL Azure Reporting CTP.

More detailed information about SQL Azure Reporting see:

http://www.microsoft.com/en-us/sqlazure/reporting.aspx

Enjoy creating your dashboards in the cloud.

Labels:

CTP,

SQL Azure Reporting CTP,

SQL Data Sync

Wednesday, November 3, 2010

Whitepaper: Inside in SQL Azure

Kalen Delaney has written a good whitepaper about the inside in SQL Azure. This is a must read for people who are Corporate decision makers, SQL Server database developers and DBA's. A lot is already writting about SQL Azure however this document is a good overview

SQL Azure Database is Microsoft’s cloud-based relational database service. Cloud computing refers to the applications delivered as services over the Internet and includes the systems, both hardware and software, providing those services from centralized data centers. This introductory section will present basic information about what SQL Azure is and what it is not, define general terminology for use in describing SQL Azure databases and applications, and provide an overview of the rest of the paper

Next topics are discussed:

SQL Azure Database is Microsoft’s cloud-based relational database service. Cloud computing refers to the applications delivered as services over the Internet and includes the systems, both hardware and software, providing those services from centralized data centers. This introductory section will present basic information about what SQL Azure is and what it is not, define general terminology for use in describing SQL Azure databases and applications, and provide an overview of the rest of the paper

Next topics are discussed:

- What is SQL Azure?

- What is in this whitepaper

- What is NOT in this whitepaper

- Terminology

- Target Audience

- Prerequisites

- Setting up SQL Azure

- Subscriptions

- Databases

- Security

- Compatibility with SQL Server

- SQL Azure Architecture Overview

- Logical Databases on a SQL Azure Server

- Network topology

- Services Layer

- Platform Layer

- High Availability with SQL Azure

- Scalability with SQL Azure

- Throttling

- Load Balancer

- SQL Azure Development Considerations

- SQL Azure Management

- Future Plans for SQL Azure

- Conclusion

Labels:

architecture,

high availability,

scalability,

SQL Azure,

whitepaper

Friday, October 29, 2010

Which Fillfactor should I use to improve performance?

The performance of your SQL Server database will depend on multiple factors. One of these factors is index fragmentation. Fragmentation of indexes will slow down performance because more page should be read. This blog post will talk about how some index fragmentation can be avoided by using the fill factor option in the index definition. By default all index information is stored in pages. During the creation of the index the storage engine will make the index leaf level pages 100% full. In other words leaving no space. This means that a random insert, or a record that increases in size, will result in a page splitt. Now fragmentation of your index is started. Random inserts will occur if the first column of an index will have random values for instance an GUID. The more page splitts occur on your index, the more fragmentation you have on this index. Page splitts can be avoided if there is enough space in the page to insert the new index records. By using the fill factor option in the configuration of your index, the storage engine will leave some free space in the leaf level pages during index build or rebuild operations. A fill factor of 90 means that 90 % of the index pages will be used and 10% is free index space.

The fill factor to use depends on the percentage of random inserts, or records that increase in size in the periode between 2 index defragmentations periods. For instance if you defrag your indexes every week, you need to know the percentage of index insert during a week.

First of all I will use a script to show the index fragmentation. I will create a table and a stored procedure to insert records and I will create 4 indexes with a GUID as the first column to generate random inserts.

-- Create a test table

CREATE TABLE FillFactorTest

(Division INT,

HID INT Identity(1,1) NOT NULL,

TestINT INT NULL,

TestGuid [uniqueidentifier] ROWGUIDCOL NOT NULL,

TestChar CHAR(760) NULL)

GO

CREATE CLUSTERED INDEX PK_FillfactorTest ON FillfactorTest(Division, HID)

GO

Now we have a test table: FillFactorTest which need to get data. I will use a stored procedure for this. This stored procedure can be used to add data in batches.

CREATE PROC [dbo].[FillFactorinsert](@Division INT, @NumberInserts INT) AS

DECLARE @COUNTER INT

DECLARE @TestINT INT

SET @Division = 100

SET @TestINT = 2

SET @COUNTER = 1

INSERT INTO FillFactorTest (Division,TestINT,TestGuid,TestChar)

Values (@Division,@TestINT,NEWID(),'Pipo')

WHILE @COUNTER < @NumberInserts

BEGIN

INSERT INTO FillFactorTest (Division,TestINT,TestGuid,TestChar)

Values (@Division,@TestINT,NEWID(),'Pipo')

SET @TestINT = @TestINT +1

SET @COUNTER = @COUNTER + 1

END

GO

-- Now it is time to insert records

-- Insert first set of 10000 records for Division 100

EXEC FillFactorinsert 100,10000

GO

-- Insert first set of 2500 records for Division 200

EXEC FillFactorinsert 200,2500

GO

-- Insert first set of 7000 records for Division 300

EXEC FillFactorinsert 300,7500

GO

-- Now we have added 20.000 records to the table FillFactorTest

SELECT COUNT(*) FROM FillFactorTest

-- Now it is time to add indexes. We will add 4 indexes on which only the fillfactor is different.

-- Create 4 times the same index on only guid only fillfactor is different

CREATE INDEX TestEntryGUID ON FillfactorTest(Testguid)

CREATE INDEX TestEntryGUIDFILL98 ON FillfactorTest(Testguid) WITH (FillFactor = 98)

CREATE INDEX TestEntryGUIDFILL95 ON FillfactorTest(Testguid) WITH (FillFactor = 95)

CREATE INDEX TestEntryGUIDFILL90 ON FillfactorTest(Testguid) WITH (FillFactor = 90)

-- Use next query to retrieve the fragmentation of these indexes.

-- Defragmentation percentage of indexes in a table

SELECT index_name AS IndexName,MAX(Fill_factor) AS Fill_factor

,SUM(avg_fragmentation_in_percent) AS Fragmentation_Percent

FROM (

SELECT

SI.[name] AS index_name

, SDDIPS.[avg_fragmentation_in_percent]

, NULL as Fill_factor

FROM sys.[dm_db_index_physical_stats](DB_ID(),OBJECT_ID('FillfactorTest') , NULL, NULL, 'Detailed') SDDIPS

INNER JOIN sys.[indexes] SI

ON SDDIPS.[object_id] = SI.[object_id] AND SDDIPS.[index_id] = SI.[index_id]

WHERE SDDIPS.[index_id] > 1

UNION ALL

SELECT COALESCE(i.name, 'N/A') as index_name

,NULL AS avg_fragmentation_in_percent,i.fill_factor AS Fill_Factor

FROM sys.tables t

INNER JOIN sys.indexes i ON t.object_id = i.object_id

LEFT OUTER JOIN sys.dm_db_index_usage_stats ius

ON i.object_id = ius.object_id AND i.index_id = ius.index_id AND ius.database_id = db_id()

WHERE t.object_id = OBJECT_ID('FillfactorTest') AND i.type = 2

) XX

GROUP BY index_name

You wil see that all indexes do not have any fragmentation.

Now it is time to insert additional records. Let's see when fragmentation occurs. We will do it in steps of 1 %.

Step 1:

-- Insert 1% new records.

EXEC FillFactorinsert 100,100

GO

EXEC FillFactorinsert 200,25

GO

EXEC FillFactorinsert 300,75

GO

SELECT COUNT(*) FROM FillFactorTest

GO

Step 2:

Re run the defragmentation script. You will see that the index with the default fill factor is full fragmented. (More than 95%)

Step3: repeat Step 1 and Step 2 for 4 times.

During these steps you will see when fragmentation start occuring on the different indexes. The results of these inserts are reflected in next graph.

Conclusion: Fragmentation (> 20%) will occur if 60% of the free space created by the fillfactor is used. This means that if you insert 5% new data you need a fill factor of at least (5/60)*100 = 8,3 Rounded to 10

Attention point: Using a fill factor will improve performance because it will avoid fragmentation. However you need to configure a defragmentation job to avoid fragmentation in the future. This means that if you insert 5 % new records per month, the defragmentation job should be scheduled once per month.

Thursday, October 21, 2010

Exact System Information improvement report extended with filtered index suggestions.

As of today the Exact System Information improvement report is extended with the possibility to implement filtered indexes for Globe databases on a server with SQL Server 2008 or SQL 2008 R2. See next screen shot from an improvement report.

As of SQL Server 2008 filtered indexes are introduced. A filtered index allows us to create an index with a filter on a subset of rows within a table. A filtered index will:

After implementing filtered indexes on some customer databases, we have seen reduction of the index size between 25% to 30%. This will results in an overall database size reduction of around 10 %. The actual reduction of the index size in your database depends on the number of NULL values in your database. Please DO NOT shrink your database after implementing the filtered indexes. Shrinking databases and explanding database can result in defragmentation of the database files on NTFS level. SQL Server will first use the free space in the database before it will growth.

Performance tests indicated no noticeable performance increase or decrease with read actions, however write performance is gained due to the fact less index information needs to be written. After implementing filtered indexes, the fragmentation of your indexes will be lower. This is good for the overall performance of your Exact solution.

To begin you need to start the Exact System Information tool and request an improvement report. The user who start the Exact System Information tool should have a SQL System Administrator role (SA). If you can implement filtered indexes, it will be mentioned in the improvement report. In the improvement report you will find a link to the script to implement filtered indexes.

We strongly advise you to run the Exact System Information on a regular basis. For instance once per 3 months. We are adding on a regular basis new suggestions to the improvement report to improve your Exact solution. If you have any feedback, please let us know. You can comment on this blog post or send an email to Andre@exact.com

This blog is also published on the Exact Product Blog.

As of SQL Server 2008 filtered indexes are introduced. A filtered index allows us to create an index with a filter on a subset of rows within a table. A filtered index will:

- Improve query performance. Statistics are more accurate which can result in better query plans.

- Reduce index maintenance costs. An index is only maintained when the data in the index is changed.

- Reduce index storage costs.

After implementing filtered indexes on some customer databases, we have seen reduction of the index size between 25% to 30%. This will results in an overall database size reduction of around 10 %. The actual reduction of the index size in your database depends on the number of NULL values in your database. Please DO NOT shrink your database after implementing the filtered indexes. Shrinking databases and explanding database can result in defragmentation of the database files on NTFS level. SQL Server will first use the free space in the database before it will growth.

Performance tests indicated no noticeable performance increase or decrease with read actions, however write performance is gained due to the fact less index information needs to be written. After implementing filtered indexes, the fragmentation of your indexes will be lower. This is good for the overall performance of your Exact solution.

To begin you need to start the Exact System Information tool and request an improvement report. The user who start the Exact System Information tool should have a SQL System Administrator role (SA). If you can implement filtered indexes, it will be mentioned in the improvement report. In the improvement report you will find a link to the script to implement filtered indexes.

We strongly advise you to run the Exact System Information on a regular basis. For instance once per 3 months. We are adding on a regular basis new suggestions to the improvement report to improve your Exact solution. If you have any feedback, please let us know. You can comment on this blog post or send an email to Andre@exact.com

This blog is also published on the Exact Product Blog.

Wednesday, October 6, 2010

Combine SQL Profiler with Performance monitor logs

To analyze the performance of your applications which is running on SQL Server you need to make use of 2 standard tools:

What do you need to do:

Enjoy using the performance monitor with SQL Profiler.

- SQL Profiler

- Windows Performance Monitor. (Perfmon)

What do you need to do:

- Make a SQL Profile trace of your application.

- Make a performance log file with Performance monitor (Perfmon) of your application via a data collector set. Save the result to a file.

- Execute some load in your application.

- Stop the SQL profiler trace file and store it as a trace file.

- Load the trace file in SQL Profiler.

- In the menu of SQL Profiler Select File, Import Performance Data and select your performance log file.

- Select the counters you want to see in the SQL profiler.

Enjoy using the performance monitor with SQL Profiler.

Monday, October 4, 2010

My filtered index is not used: The impact of forced parameterization.

As of SQL Server 2008 filtered indexes can be used. This can save you a lot of index space and will improve performance during index updates and will result in less index defragmentation. In case your database is configured with forced parameterization, it can happen that your filtered index is not used. For instance you have an filtered index like:

CREATE INDEX ZipcodeFiltered on Zipcodes(Zipcode) WHERE Zipcode = '3000'

Next query is executed

SELECT ID,Zipcode FROM Zipcodes WHERE Zipcode = '3000'

Looking to the query plan the ZipcodeFiltered index is not used. This happens if your database is configured for Forced Parameterization.

To explain this behaviour I will create a repro sample:

CREATE DATABASE FilterTest

GO

USE [FilterTest]

GO

CREATE TABLE Zipcodes

(ID INT,

Zipcode NVARCHAR(20),

City NVARCHAR (100),

Extra NVARCHAR (100))

GO

INSERT INTO Zipcodes (ID,Zipcode,City,Extra) VALUES (1,'3000','Rotterdam',NULL)

INSERT INTO Zipcodes (ID,Zipcode,City,Extra) VALUES (1,'3001','Rotterdam',NULL)

INSERT INTO Zipcodes (ID,Zipcode,City,Extra) VALUES (1,'3002','Rotterdam',NULL)

INSERT INTO Zipcodes (ID,Zipcode,City,Extra) VALUES (1,'3003','Rotterdam',NULL)

INSERT INTO Zipcodes (ID,Zipcode,City,Extra) VALUES (1,'3004','Rotterdam',NULL)

INSERT INTO Zipcodes (ID,Zipcode,City,Extra) VALUES (1,'3005','Rotterdam',NULL)

INSERT INTO Zipcodes (ID,Zipcode,City,Extra) VALUES (1,'3006','Rotterdam',NULL)

INSERT INTO Zipcodes (ID,Zipcode,City,Extra) VALUES (1,'3000','Rotterdam','TestFilter')

INSERT INTO Zipcodes (ID,Zipcode,City,Extra) VALUES (1,'3001','Rotterdam','TestFilter')

INSERT INTO Zipcodes (ID,Zipcode,City,Extra) VALUES (1,'3002','Rotterdam','TestFilter')

-- Create regular indexes and filtered version

GO

CREATE CLUSTERED INDEX Clus_zipcodes ON Zipcodes(ID)

CREATE INDEX Zipcode ON Zipcodes(Zipcode)

-- Create same index but now with filter

CREATE INDEX ZipcodeFiltered ON Zipcodes(Zipcode)

WHERE Zipcode = '3000'

CREATE INDEX Extra ON Zipcodes(Extra)

-- Create same index but now with filter

CREATE INDEX ExtraFiltered ON Zipcodes(Extra)

WHERE EXTRA IS NOT NULL

-- Database Not using forced parameterization

ALTER DATABASE FilterTest SET PARAMETERIZATION SIMPLE;

SELECT ID,Zipcode FROM Zipcodes WHERE Zipcode = '3000'

-- Look in the queryplan: Index ZipcodeFiltered is used

SELECT Extra,ID FROM Zipcodes WHERE EXTRA = 'TestFilter'

-- Look in the queryplan: Index ExtraFiltered is used

ALTER DATABASE FilterTest SET PARAMETERIZATION FORCED;

SELECT ID,Zipcode FROM Zipcodes WHERE Zipcode = '3000'

-- Look in the queryplan: Index Zipcode is used. So not the ZipcodeFiltered

SELECT Extra,ID FROM Zipcodes WHERE EXTRA = 'TestFilter'

-- Look in the queryplan: Index ExtraFiltered is still used. Even with Forced Parameterization

DROP . DATABASE FilterTest

Why is the filtered index not used with forced parameterization?

With forced parameterization the SQL Server engine will auto parameterize your query. This means that next query:

SELECT ID,Zipcode FROM Zipcodes WHERE Zipcode = '3000'

will be stored in the procedure cache as:

SELECT ID,Zipcode FROM Zipcodes WHERE Zipcode = @0

The query plan for this query: SELECT ID,Zipcode FROM Zipcodes WHERE Zipcode = @0

will be using the 'regular' index without the filter because for the value @0 the query optimizer does not know if it is '3000' or another value.

As you have seen in the example this behaviour does not apply for filtered indexes with an WHERE COLUMN_X IS NOT NULL.

Conclusion:

You can use filtered indexes with Forced Parameterization if your filtered indexes are using a WHERE Clause like COLUMN_X IS NOT NULL.

Special thanks to my colleague Ad van der Hoeven for bringing this to my attention.

Enjoy the use of filtered indexes

CREATE INDEX ZipcodeFiltered on Zipcodes(Zipcode) WHERE Zipcode = '3000'

Next query is executed

SELECT ID,Zipcode FROM Zipcodes WHERE Zipcode = '3000'

Looking to the query plan the ZipcodeFiltered index is not used. This happens if your database is configured for Forced Parameterization.

To explain this behaviour I will create a repro sample:

CREATE DATABASE FilterTest

GO

USE [FilterTest]

GO

CREATE TABLE Zipcodes

(ID INT,

Zipcode NVARCHAR(20),

City NVARCHAR (100),

Extra NVARCHAR (100))

GO

INSERT INTO Zipcodes (ID,Zipcode,City,Extra) VALUES (1,'3000','Rotterdam',NULL)

INSERT INTO Zipcodes (ID,Zipcode,City,Extra) VALUES (1,'3001','Rotterdam',NULL)

INSERT INTO Zipcodes (ID,Zipcode,City,Extra) VALUES (1,'3002','Rotterdam',NULL)

INSERT INTO Zipcodes (ID,Zipcode,City,Extra) VALUES (1,'3003','Rotterdam',NULL)

INSERT INTO Zipcodes (ID,Zipcode,City,Extra) VALUES (1,'3004','Rotterdam',NULL)

INSERT INTO Zipcodes (ID,Zipcode,City,Extra) VALUES (1,'3005','Rotterdam',NULL)

INSERT INTO Zipcodes (ID,Zipcode,City,Extra) VALUES (1,'3006','Rotterdam',NULL)

INSERT INTO Zipcodes (ID,Zipcode,City,Extra) VALUES (1,'3000','Rotterdam','TestFilter')

INSERT INTO Zipcodes (ID,Zipcode,City,Extra) VALUES (1,'3001','Rotterdam','TestFilter')

INSERT INTO Zipcodes (ID,Zipcode,City,Extra) VALUES (1,'3002','Rotterdam','TestFilter')

-- Create regular indexes and filtered version

GO

CREATE CLUSTERED INDEX Clus_zipcodes ON Zipcodes(ID)

CREATE INDEX Zipcode ON Zipcodes(Zipcode)

-- Create same index but now with filter

CREATE INDEX ZipcodeFiltered ON Zipcodes(Zipcode)

WHERE Zipcode = '3000'

CREATE INDEX Extra ON Zipcodes(Extra)

-- Create same index but now with filter

CREATE INDEX ExtraFiltered ON Zipcodes(Extra)

WHERE EXTRA IS NOT NULL

-- Database Not using forced parameterization

ALTER DATABASE FilterTest SET PARAMETERIZATION SIMPLE;

SELECT ID,Zipcode FROM Zipcodes WHERE Zipcode = '3000'

-- Look in the queryplan: Index ZipcodeFiltered is used

SELECT Extra,ID FROM Zipcodes WHERE EXTRA = 'TestFilter'

-- Look in the queryplan: Index ExtraFiltered is used

ALTER DATABASE FilterTest SET PARAMETERIZATION FORCED;

SELECT ID,Zipcode FROM Zipcodes WHERE Zipcode = '3000'

-- Look in the queryplan: Index Zipcode is used. So not the ZipcodeFiltered

SELECT Extra,ID FROM Zipcodes WHERE EXTRA = 'TestFilter'

-- Look in the queryplan: Index ExtraFiltered is still used. Even with Forced Parameterization

DROP . DATABASE FilterTest

Why is the filtered index not used with forced parameterization?

With forced parameterization the SQL Server engine will auto parameterize your query. This means that next query:

SELECT ID,Zipcode FROM Zipcodes WHERE Zipcode = '3000'

will be stored in the procedure cache as:

SELECT ID,Zipcode FROM Zipcodes WHERE Zipcode = @0

The query plan for this query: SELECT ID,Zipcode FROM Zipcodes WHERE Zipcode = @0

will be using the 'regular' index without the filter because for the value @0 the query optimizer does not know if it is '3000' or another value.

As you have seen in the example this behaviour does not apply for filtered indexes with an WHERE COLUMN_X IS NOT NULL.

Conclusion:

You can use filtered indexes with Forced Parameterization if your filtered indexes are using a WHERE Clause like COLUMN_X IS NOT NULL.

Special thanks to my colleague Ad van der Hoeven for bringing this to my attention.

Enjoy the use of filtered indexes

Labels:

filtered index,

forced parameterization,

not used,

queryplan

Sunday, October 3, 2010

SQL Profiler is on my second screen, but my second screen is not connected.

I use a lot of time multiple screens on my laptop. With SQL Profiler this can give some challenges. I will explain in which situation.

- Connect an extra monitor to your laptop.

- Extend this screen as the second screen.

- Start SQL Profiler and move it to the second screen.

- Close SQL profiler on the second screen.

- Disconnect the second screen.

- Start SQL Profiler.

- Click on the SQL Profiler window in the taskbar.

- Press ALT ENTER to get the propertie window of the SQL Profiler.

- Select MOVE.

- Use you arrow keys to move the invisible window back to your screen.

Labels:

hidden,

second screen,

SQL Profiler,

Windows

Wednesday, September 29, 2010

Get the maximum out of your Exact solution: Self service in one click.

In the past I blogged about the Exact System Information tool. With this tool you can optimize your Exact solution in one click. Please have a look to the embedded PowerPoint Web app presentation to see what the Exact System Information can do for you as an Exact user.

If you are unable to see the presentation you can see it here

Enjoy it!

If you are unable to see the presentation you can see it here

Enjoy it!

Tuesday, September 28, 2010

Support policy for SQL Server 2000 on newer Operating Systems

This blog post applies to customers who uses Microsoft SQL 2000 as database server. In the past Exact Globe started with Microsoft SQL Server 2000 as database server. As of today, still a lot of our customers uses Microsoft SQL Server 2000 as database server. On 14 September Microsoft announced the support policy for the newer operating systems. This means Microsoft SQL Server 2000 is not supported on the latest operatings systems like:

- Windows Vista (all editions)

- Windows Server 2008 (all editions)

- Windows Server 2008 R2 (all editions)

- Windows 7 (all editions)

In case you want to use Windows Server 2008 or Windows Server 2008 R2, as operating system for your new server, you need to upgrade your SQL Server version. Please contact your Exact reseller for an SQL Server license with a big discount in case you are using the SQL server only for Exact databases. As of release 398 Exact Globe supports Microsoft SQL Server 2008 R2.

Enjoy your upgrade to SQL Server 2008 R2

Labels:

Exact Globe,

MS,

SQL 2000,

SQL Server 2008 Upgrade 2005

Tuesday, September 21, 2010

How to: Hide a SSRS report item like tablix, table, charts etc. if no data is found.

In your SQL Server Reporting Server (SSRS) reports you can add multiple report items. In some situations it can happen that no data is available for one of the data sets which are linked to these report items. Displaying empty report items can be confusing for the user. It is possible to display report items only if data is available to display. This can be done by defining an expression for the visibility of the report item. The result of this expression is the trigger to show or hide the report item. To configure the show or hide of a report item, you need to do the following:

- Open the properties of the report item

- Select visibility

- Set when the report is initially run: Show or hide based on an expression.

- Press the fx button to define the expression

- Next example is an expression which will not display the tablix if the columns Hostname of dataset 'IndexImprovements' returns 0 records.

=IIf(Count(Fields!hostname.Value, "IndexImprovements")=0,True,False)

Enjoy building your reports.

Picture credit: André Speek

Labels:

display,

empty,

no data,

SQL 2008,

SQL 2008 R2,

SSRS,

visibility

Monday, September 6, 2010

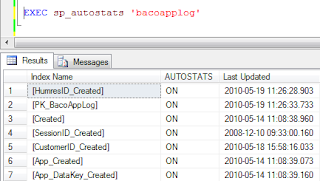

Slow performance because of outdated index statistics.

Index statistics are the basis for the SQL Server database engine to generate the most efficient query plan. By default statistics are updated automaticly. The query optimizer determines when statistics might be out-of-date and then updates them when they are used by a query. Statistics become out-of-date after insert, update, delete, or merge operations change the data distribution in the table or indexed view. The query optimizer determines when statistics might be out-of-date by counting the number of data modifications since the last statistics update and comparing the number of modifications to a threshold. The threshold is based on the number of rows in the table or indexed view. In the situation you encounter a performance problem and you do not understand the generated execution plan you can doubt if the statistics are up to date. Now you can do 2 things. First directly execute an update statistics on the table, but much better check how recent your statistics are. In case they are very recent, it is not necessary to execute an update statistics.

First check if indexes are disabled for auto update statistcs (No_recompute = 0). Next query will retrieve all indexes for which auto update statistics are disabled:

SELECT o.name AS [Table], i.name AS [Index Name],

STATS_DATE(i.object_id, i.index_id) AS [Update Statistics date],

s.auto_created AS [Created by QueryProcessor], s.no_recompute AS [Disabled Auto Update Statistics],

s.user_created AS [Created by user]

FROM sys.objects AS o WITH (NOLOCK)

INNER JOIN sys.indexes AS i WITH (NOLOCK) ON o.object_id = i.object_id

INNER JOIN sys.stats AS s WITH (NOLOCK) ON i.object_id = s.object_id AND i.index_id = s.stats_id

WHERE o.[type] = 'U'

AND no_recompute = 1

ORDER BY STATS_DATE(i.object_id, i.index_id) ASC;

Use next query to enable the Auto Update Statistics for IndexA of TableX.

ALTER INDEX

ON dbo.

SET (STATISTICS_NORECOMPUTE = OFF);

Use next query to see the update statistics date.

SELECT o.name AS [Table], i.name AS [Index Name],

STATS_DATE(i.object_id, i.index_id) AS [Update Statistics date],

s.auto_created AS [Created by QueryProcessor], s.no_recompute AS [Disabled Auto Update Statistics],

s.user_created AS [Created by user]

FROM sys.objects AS o WITH (NOLOCK)

INNER JOIN sys.indexes AS i WITH (NOLOCK) ON o.object_id = i.object_id

INNER JOIN sys.stats AS s WITH (NOLOCK) ON i.object_id = s.object_id AND i.index_id = s.stats_id

WHERE o.[type] = 'U'

ORDER BY STATS_DATE(i.object_id, i.index_id) ASC;

In case your indexes are outdated use next query to update the statistics:

UPDATE STATISTICS TableX IndexA

Enjoy your performance tuning.

First check if indexes are disabled for auto update statistcs (No_recompute = 0). Next query will retrieve all indexes for which auto update statistics are disabled:

SELECT o.name AS [Table], i.name AS [Index Name],

STATS_DATE(i.object_id, i.index_id) AS [Update Statistics date],

s.auto_created AS [Created by QueryProcessor], s.no_recompute AS [Disabled Auto Update Statistics],

s.user_created AS [Created by user]

FROM sys.objects AS o WITH (NOLOCK)

INNER JOIN sys.indexes AS i WITH (NOLOCK) ON o.object_id = i.object_id

INNER JOIN sys.stats AS s WITH (NOLOCK) ON i.object_id = s.object_id AND i.index_id = s.stats_id

WHERE o.[type] = 'U'

AND no_recompute = 1

ORDER BY STATS_DATE(i.object_id, i.index_id) ASC;

Use next query to enable the Auto Update Statistics for IndexA of TableX.

ALTER INDEX

ON dbo.

SET (STATISTICS_NORECOMPUTE = OFF);

Use next query to see the update statistics date.

SELECT o.name AS [Table], i.name AS [Index Name],

STATS_DATE(i.object_id, i.index_id) AS [Update Statistics date],

s.auto_created AS [Created by QueryProcessor], s.no_recompute AS [Disabled Auto Update Statistics],

s.user_created AS [Created by user]

FROM sys.objects AS o WITH (NOLOCK)

INNER JOIN sys.indexes AS i WITH (NOLOCK) ON o.object_id = i.object_id

INNER JOIN sys.stats AS s WITH (NOLOCK) ON i.object_id = s.object_id AND i.index_id = s.stats_id

WHERE o.[type] = 'U'

ORDER BY STATS_DATE(i.object_id, i.index_id) ASC;

In case your indexes are outdated use next query to update the statistics:

UPDATE STATISTICS TableX IndexA

Enjoy your performance tuning.

Sunday, August 29, 2010

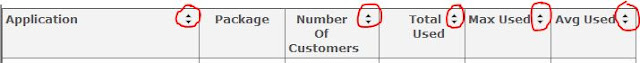

How to enable and configure interactive (dynamic) sorting on your SSRS reports.

In reporting server you can build reports which are sorted in a specific way. However sometimes it depends on the usage of the report on which column a report should be sorted. User A want to sort it on column X and user B wants to sort it on column Y. To configure dynamic sorting on your SSRS reports you need to do the following:

- Open your SSRS report.

- Select the header of the column on which you want to enable interactive sorting.

- Open the properties of the Text properties window.

- Tick enable interactive sorting on this text box.

- Select the columns of the dataset you want to sort on.

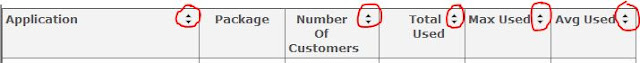

- Repeat previous steps for all columns on which you want enable interactive sorting.See the red circles for which columns interactive sorting is enabled.

Enjoy building nice reports.

Labels:

columns,

dynamic sorting,

interactive sorting,

SQL 2008,

SQL 2008 R2,

SSRS

Thursday, August 26, 2010

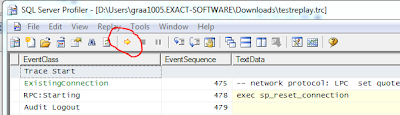

Use SQL profiler replay traces to benchmark performance of your SQL server database.

In SQL Server 2008 (R2) a lot of new features are build to improve the overall performance of your database. These features are mostly independent of your application. To implement these new features, you want to know what the impact is of an implemented feature. This blogpost will explain how SQL Profiler can help you to simulate 'production' workload on your database.

Some examples of these new features:

- Filtered indexes

- Data compression

- Optimize for ad-hoc workloads.

- Partitioned tables in combination with data compression

In general the replay process can divided into:

- Make a full backup of the production database.

- Capture workload with SQL Profiler.

- Restore production database on a test server.

- Replay workload to create baseline. Use SQL profiler to measure CPU and IO

- Restore production database and configure some of the new features.

- Replay workload. Use SQL Profiler to measure CPU and IO.

- Compare the results with the baseline.

Step 1: Make a full backup of your production database.

Step 2: Capture workload with SQL profiler

- Start SQL Profiler with the trace template 'TSQL_Replay' and a filter on the database id of your production database.

- Save the trace results to a new database on another SQL server as your production server.

- Select a database in which you want to store your replytraces and define a table name. In this example I have created a Database: ReplayTraces.

- Define a filter for the database of your production database. Use next query to retrieve the database ID of your production database:

SELECT Dbid FROM Master..SYSDatabases WHERE Name = '' - Start the profiler when the FULL BACKUP process is almost completed. Starting the replay just before the full backup is completed garantees that you have all queries which are executed after the full backup is completed.

- The profiler will now capture all executed queries on your production database.

- Stop the SQL Profiler trace at the moment you have captured enough data which can be representative for your tests.

Now we have a backup of the production database and a database with the captured workload. Be sure to have backups of these 2 database because you will need them a lot of times for your tests.

Restore the backup of your production database on your test server.

Step 4: Replay workload to create baseline. Use SQL profiler to measure CPU and IO

For a benchmark we need to have a baseline. To create a baseline execute next steps:

- Load the captured profile data in the SQL Profiler.

- Open SQL profiler and select File, Open, Trace Table.

- Select the SQL Server,Database and tablename in which you have captured the production workload.

To replay a trace against a server (the target) on which SQL Server is running other than the server originally traced (the source), make sure the following has been done:

- All logins and users contained in the trace must be created already on the target and in the same database as the source.

- All logins and users in the target must have the same permissions they had in the source.

- All login passwords must be the same as those of the user that executes the replay.

- The database IDs on the target should be the same as those on the source. If they are not the same you can do the following: Assume Source DBID = 10 Target DBID = 6. Detach your TestProduction database. Create a new database. This database will get DBID 6. Create 3 other Databases. The last created database will have DBID 9. Attach you TestProduction database. This will now get DBID 10.

- The default database for each login contained in the trace must be set (on the target) to the respective target database of the login. For example, the trace to be replayed contains activity for the login, Fred, in the database Fred_Db on the source. Therefore, on the target, the default database for the login, Fred, must be set to the database that matches Fred_Db (even if the database name is different). To set the default database of the login, use the sp_defaultdb system stored procedure.

- Create and start a SQL profile trace with a filter on the database ID of the restored production database on the test server. Save the results to a SQL Server database. This will be your baseline.

- To start the Replay, press the yellow arrow.

Step 5: Restore production database and configure some of the new features.

In the previous step we made the baseline. Now it is time to test the new features.

- Configure the new features you want to test.

- Load the captured profile data in the SQL Profiler

- Create and start a SQL profile trace with a filter on the database ID of the restored production database on the test server. Save the results to a SQL Server database in another table as you used for your baseline.

- Start the replay.

Step 6: Replay workload. Use SQL Profiler to measure CPU and IO.

Step 7: Compare the results with the baseline.

The results of the baseline and the first test are stored in 2 seperate tables. For instance: Table Baseline and Table Test1_Datacompression.

Use next query to compare the results:

SELECT 'Baseline' AS Test, COUNT(*) AS Queries,

SUM(CPU) AS CPU,SUM(READS) AS Reads,

SUM(Writes) AS Writes,SUM(Duration)/1000 AS Duration

FROM EOLSQL2008Replayresults.dbo.Baseline

WHERE EVENTCLASS in (10,12)

UNION ALL

SELECT 'Test1_Datacompression' AS Test, COUNT(*) AS Queries,

SUM(CPU) AS CPU, SUM(READS) AS Reads,

SUM(Writes) AS Writes, SUM(Duration)/1000 AS Duration

FROM EOLSQL2005Replayresults.dbo.Test1_Datacompression

WHERE EVENTCLASS in (10,12)

SUM(CPU) AS CPU,SUM(READS) AS Reads,

SUM(Writes) AS Writes,SUM(Duration)/1000 AS Duration

FROM EOLSQL2008Replayresults.dbo.Baseline

WHERE EVENTCLASS in (10,12)

UNION ALL

SELECT 'Test1_Datacompression' AS Test, COUNT(*) AS Queries,

SUM(CPU) AS CPU, SUM(READS) AS Reads,

SUM(Writes) AS Writes, SUM(Duration)/1000 AS Duration

FROM EOLSQL2005Replayresults.dbo.Test1_Datacompression

WHERE EVENTCLASS in (10,12)

The number of queries should be the same because you replayed the same workload on both databases.

Success with your benchmark.

Labels:

benchmark,

performance,

sql,

SQL 2008,

SQL 2008 R2,

sql profiler trace

Wednesday, August 11, 2010

Forced parameterization does not work for partly parameterized queries.

In my previous blog I described how you can recognize a forced parameterized query. If you have set the parameterization option to forced on database level, you can still find some queries which are not parameterized. In this blog I will describe why?

Please use the AdventureWorks database to use the scripts.

USE AdventureWorks

--Enable the forced parameterization on the database

ALTER DATABASE AdventureWorks SET PARAMETERIZATION FORCED

GO

-- Clear the procedure cache

DBCC FREEPROCCACHE

GO

-- Update 2 different records both with an different Title.

UPDATE HumanResources.Employee SET Title = 'Support' WHERE ContactID = 1002

GO

UPDATE HumanResources.Employee SET Title = 'xx1290Support' WHERE ContactID = 1290

GO

-- Look in the procedure cache.

SELECT text,execution_count

FROM sys.dm_exec_query_stats AS qs CROSS APPLY

sys.dm_exec_sql_text(sql_handle) CROSS APPLY

sys.dm_exec_text_query_plan(qs.plan_handle, qs.statement_start_offset, qs.statement_end_offset) AS qp

order by text

-- You will find 1 entry Title and ContactID are parameterized.

-- Clear procedure cache

DBCC FREEPROCCACHE

Same query but ContactId is parameterized. Title is not parameterized.

GO

exec sp_executeSql N'UPDATE HumanResources.Employee SET Title = ''Support'' WHERE ContactID = @P1' ,N'@P1 INT',@P1 = 1002

GO

exec sp_executeSql N'UPDATE HumanResources.Employee SET Title = ''xx1290Support'' WHERE ContactID = @P1' ,N'@P1 INT',@P1 = 1290

GO

-- Look in the procedure cache

SELECT text,execution_count

FROM sys.dm_exec_query_stats AS qs CROSS APPLY

sys.dm_exec_sql_text(sql_handle) CROSS APPLY

sys.dm_exec_text_query_plan(qs.plan_handle, qs.statement_start_offset, qs.statement_end_offset) AS qp

order by text

-- You will find 2 entries. Forced parameterization does not work.

-- Clear procedure cache

DBCC FREEPROCCACHE

-- Same query ContactID and Title are parameterized.

exec sp_executeSql N'UPDATE HumanResources.Employee SET Title = @P2 WHERE ContactID = @P1' ,N'@P1 INT, @P2 varchar(20)',@P1 = 1002, @P2 = 'Support'

GO

exec sp_executeSql N'UPDATE HumanResources.Employee SET Title = @P2 WHERE ContactID = @P1' ,N'@P1 INT, @P2 varchar(20)',@P1 = 1290, @P2 = 'xx1290Support'

GO

-- Look in the procedure cache

SELECT text,execution_count

FROM sys.dm_exec_query_stats AS qs CROSS APPLY

sys.dm_exec_sql_text(sql_handle) CROSS APPLY

sys.dm_exec_text_query_plan(qs.plan_handle, qs.statement_start_offset, qs.statement_end_offset) AS qp

order by text

-- You will find 1 entry because the query is already parameterized.

Conclusion: Forced parameterization does only work if the query does not contain any parameter. If at least one parameter is defined, forced parametization does not work anymore.

Enjoy the use of forced parameterization in the right context.

Please use the AdventureWorks database to use the scripts.

USE AdventureWorks

--Enable the forced parameterization on the database

ALTER DATABASE AdventureWorks SET PARAMETERIZATION FORCED

GO

-- Clear the procedure cache

DBCC FREEPROCCACHE

GO

-- Update 2 different records both with an different Title.

UPDATE HumanResources.Employee SET Title = 'Support' WHERE ContactID = 1002

GO

UPDATE HumanResources.Employee SET Title = 'xx1290Support' WHERE ContactID = 1290

GO

-- Look in the procedure cache.

SELECT text,execution_count

FROM sys.dm_exec_query_stats AS qs CROSS APPLY

sys.dm_exec_sql_text(sql_handle) CROSS APPLY

sys.dm_exec_text_query_plan(qs.plan_handle, qs.statement_start_offset, qs.statement_end_offset) AS qp

order by text

-- You will find 1 entry Title and ContactID are parameterized.

-- Clear procedure cache

DBCC FREEPROCCACHE

Same query but ContactId is parameterized. Title is not parameterized.

GO

exec sp_executeSql N'UPDATE HumanResources.Employee SET Title = ''Support'' WHERE ContactID = @P1' ,N'@P1 INT',@P1 = 1002

GO

exec sp_executeSql N'UPDATE HumanResources.Employee SET Title = ''xx1290Support'' WHERE ContactID = @P1' ,N'@P1 INT',@P1 = 1290

GO

-- Look in the procedure cache

SELECT text,execution_count

FROM sys.dm_exec_query_stats AS qs CROSS APPLY

sys.dm_exec_sql_text(sql_handle) CROSS APPLY

sys.dm_exec_text_query_plan(qs.plan_handle, qs.statement_start_offset, qs.statement_end_offset) AS qp

order by text

-- You will find 2 entries. Forced parameterization does not work.

-- Clear procedure cache

DBCC FREEPROCCACHE

-- Same query ContactID and Title are parameterized.

exec sp_executeSql N'UPDATE HumanResources.Employee SET Title = @P2 WHERE ContactID = @P1' ,N'@P1 INT, @P2 varchar(20)',@P1 = 1002, @P2 = 'Support'

GO

exec sp_executeSql N'UPDATE HumanResources.Employee SET Title = @P2 WHERE ContactID = @P1' ,N'@P1 INT, @P2 varchar(20)',@P1 = 1290, @P2 = 'xx1290Support'

GO

-- Look in the procedure cache

SELECT text,execution_count

FROM sys.dm_exec_query_stats AS qs CROSS APPLY

sys.dm_exec_sql_text(sql_handle) CROSS APPLY

sys.dm_exec_text_query_plan(qs.plan_handle, qs.statement_start_offset, qs.statement_end_offset) AS qp

order by text

-- You will find 1 entry because the query is already parameterized.

Conclusion: Forced parameterization does only work if the query does not contain any parameter. If at least one parameter is defined, forced parametization does not work anymore.

Enjoy the use of forced parameterization in the right context.

Tuesday, August 10, 2010

How to recognize forced parameterization queries in the procedure cache?

At the moment a query is started SQL Server will first analyze the query to find the best way to execute the query. This is called the execution plan. In the execution plan information is stored about for instance which index to use to retrieve the data. All execution plans are stored in the plan cache. If a new query is executed, SQL server will look if an execution plan is available for this query in the plan cache. If it is available it will be re-used. This will save the generation of the execution plan. For every execution plan SQL server counts how much time it is used. In your application you can parameterize your queries so SQL can re -use query plans. In case your application is not using parameterization, SQL Server can force parameterization on a database level. Analyzing the procedure cache can help you in optimizing the performance of your application. This blogpost will explain how you can recognize forced parameterized queries in the procedure cache.

Next example queries can be used on the AdventureWorks demo database. To download the AdventureWorks demo database click here.

First of all we will not use force paramerization:

ALTER DATABASE AdventureWorks SET PARAMETERIZATION SIMPLE

Execute next example of a parameterized query:

exec sp_executeSql N'Select * from HumanResources.Employee where ContactID = @P1' ,N'@P1 INT',@P1 = 1290

GO

Execute the same parameterized query for another value (1002)

exec sp_executeSql N'Select * from HumanResources.Employee where ContactID = @P1' ,N'@P1 INT',@P1 = 1002

GO

Now we can look in the procedure cache:

SELECT text,execution_count FROM sys.dm_exec_query_stats AS qs CROSS APPLY

sys.dm_exec_sql_text(sql_handle) CROSS APPLY

sys.dm_exec_text_query_plan(qs.plan_handle, qs.statement_start_offset, qs.statement_end_offset) AS qp

order by text

You will get one row with an execution count of 2. Because the query uses a parameter for ContactID, the execution plan is available when the query for parameter value 1002 is executed.

The parameterized query can be recognized on the ContactID=@P1 (Remember the P)

If we do not use parameterization we have next 2 queries:

SELECT * FROM HumanResources.Employee WHERE ContactID = 1290

GO

SELECT * FROM HumanResources.Employee WHERE ContactID = 1002

The procedure cache will have 2 entries. One for every query.

Now we enable forced parameterization.

ALTER DATABASE AdventureWorks SET PARAMETERIZATION FORCED

Execute a non parameterized queries:

SELECT * FROM HumanResources.Employee WHERE ContactID = 1290

go

SELECT * FROM HumanResources.Employee WHERE ContactID = 1002

The procedure cache will have one result set:

The forced parameterization can be recognized on the

ContactID = @0. (So without the P)

Enjoy analyzing your procedure cache to further improve the performance of your application.

Photo Credit: Suchitra Prints

Next example queries can be used on the AdventureWorks demo database. To download the AdventureWorks demo database click here.

First of all we will not use force paramerization:

ALTER DATABASE AdventureWorks SET PARAMETERIZATION SIMPLE

Execute next example of a parameterized query:

exec sp_executeSql N'Select * from HumanResources.Employee where ContactID = @P1' ,N'@P1 INT',@P1 = 1290

GO

Execute the same parameterized query for another value (1002)

exec sp_executeSql N'Select * from HumanResources.Employee where ContactID = @P1' ,N'@P1 INT',@P1 = 1002

GO

Now we can look in the procedure cache:

SELECT text,execution_count FROM sys.dm_exec_query_stats AS qs CROSS APPLY

sys.dm_exec_sql_text(sql_handle) CROSS APPLY

sys.dm_exec_text_query_plan(qs.plan_handle, qs.statement_start_offset, qs.statement_end_offset) AS qp

order by text

You will get one row with an execution count of 2. Because the query uses a parameter for ContactID, the execution plan is available when the query for parameter value 1002 is executed.

The parameterized query can be recognized on the ContactID=@P1 (Remember the P)

If we do not use parameterization we have next 2 queries:

SELECT * FROM HumanResources.Employee WHERE ContactID = 1290

GO

SELECT * FROM HumanResources.Employee WHERE ContactID = 1002

The procedure cache will have 2 entries. One for every query.

Now we enable forced parameterization.

ALTER DATABASE AdventureWorks SET PARAMETERIZATION FORCED

Execute a non parameterized queries:

SELECT * FROM HumanResources.Employee WHERE ContactID = 1290

go

SELECT * FROM HumanResources.Employee WHERE ContactID = 1002

The procedure cache will have one result set:

The forced parameterization can be recognized on the

ContactID = @0. (So without the P)

Enjoy analyzing your procedure cache to further improve the performance of your application.

Photo Credit: Suchitra Prints

Sunday, August 8, 2010

Whitepaper: High Performance SQL Server Workloads on Hyper -V

Enjoy reading: Whitepaper: High Performance SQL Server Workloads on Hyper -V

Wednesday, July 28, 2010

Overview performance improvements in Exact Globe in the last years.

Photo credit Alancleaver_2000

Photo credit Alancleaver_2000This blog post will give an overview of all performance improvements and cleanup functions.

- Clean up log files. Release 360. Functionality is made to clean up ‘Application log’ or ‘Masterdata log’

- Clean up of historical journal records. Release 360. Historical data in Globe is stored in multiple tables in the database. In the previous release of Globe application, there is no process in place to perform the clearing of old records. As the database size grows from period to period and from year to year, the system performance will be deteriorated

- Database structure optimized. Release 370. Exact Globe 2003 database used data type CHAR instead of VARCHAR to store data with length more than 10 bytes. CHAR always uses fixed length to store data whereas VARCHAR uses dynamic length which depends on the actual size of the data. Therefore from a database structure standpoint, the database was not optimized. As a result, the following drawbacks were introduced. Record was unnecessarily bigger which caused slower queries because less record can be retrieved in one disk I/O. Indexes were bigger which again reduced query performance

- Clean Up XML Import Logs. Product update 395. Whenever you import XML files to Exact Globe, the XML import results which are the log of the relevant events will be recorded. An option to remove the XML logs are added to the functionality for cleaning up logs.

- Cleanup tool to delete obsolete logistic records and MRP planning records. Product update 395. Logistic processes in Exact Globe generate MRP planning records. Examples of logistic processes are sales orders, blanket sales orders, return to merchant authorization (RMA) orders, purchase orders, blanket purchase orders, return to vendor (RTV) orders, interbranch transfers, and quotations. Over the years, as customers’ databases become larger with logistic transactions, the database actions on these records become slower. Historical records such as completed sales or purchase orders may be considered obsolete after the completion of the logistic processes over a certain period of time. In order to enhance the performance of the administration, these obsolete logistics and planning records are best deleted.

- Retrieving Balance Totals. Product update 395. Due to the database structure of Exact Globe, the retrieval of transaction totals requires the system to totalize all transactions. Over time, as your database becomes bigger and filled with transactions, the retrieval of balance totals becomes slower. Faster retrievals are therefore necessary to help you work more efficiently with the system. Enhancements have been made to improve the performance of retrieving balance totals

- Database Performance by Optimizing Index. Product update 397. In every Exact Globe product update, system performance is improved by optimizing the database indices in Exact Globe. In this product update, a tool is implemented to optimize your database instead of the standard indices in Exact Globe. With this method, the indices will be deployed only when it is required by your SQL server. This method also reduces deployment risk because you can easily add or remove the indices if you are not satisfied with the performance result. There will also be performance improvement for functions that use the new index.

- Cleanup tool to delete obsolete logistics records extended to Production Orders and Stock Allocations.Product update 398. The scope of the cleaning up tool is extended to completed production orders and all the respective allocation entries. The allocation entries refer to stock allocation records generated from back-to-back orders and sales order enrichments. Consequently, the absence of production orders and stock allocation is no longer the prerequisite for executing the tool.

Labels:

cleanup,

delete,

Exact Globe,

Globe,

improvements,

performance

Tuesday, July 27, 2010

Performance improvement Globe (398): Logistic MRP Cleanup Tool Extended to Production Orders and stock allocations.

Photo Credit: Donger

Photo Credit: DongerIn Exact Globe product update 395, the logistics MRP cleanup tool for cleaning up obsolete logistic records and budget entries without affecting the related processes was introduced. The records included completed sales orders, blanket sales orders, RMA orders, purchase orders, blanket purchase orders, RTV orders, interbranch transfer requests, and quotations. For more information, see Product Update 395: Improved Performance with Obsolete Logistic Records and MRP Planning Records Cleanup Tool .

However as of product update 398, in order to enhance the performance of the administration, system administrators also need to delete the obsolete logistics and planning records for production orders and allocation entries. As such, in this implementation, the scope of the cleaning up tool is extended to completed production orders and all the respective allocation entries. The allocation entries refer to stock allocation records generated from back-to-back orders and sales order enrichments. Consequently, the absence of production orders and stock allocation is no longer the prerequisite for executing the tool. This enhancement will definitely assist administrators in boosting the performance of the administration with ease. For more information about the updated prerequisites, how to use the cleanup tool, and the latest list of functionalities that are affected by the cleaning up process, see How-to: Cleaning Up Obsolete Logistic Records and Budget Entries.

To get an estimate how much records can be cleaned up, please run the Exact System Information tool and request an improvement report. More information about the improvement reports of the Exact System Information tool can be found here.

Enjoy the update to Globe product update 398.

Exact Globe supports SQL Server 2008 R2 as of product update 398

In July 2010 the Exact Globe product update 398 is released. As of this product update 398, Microsoft SQL Server 2008 R2 is supported. Beside this Office 2010 (32 bits) is supported. More information about this product update can be found here.

Be aware that a database which is upgraded to SQL 2008 R2 can't be used on a previous version of SQL Server.

Enjoy your upgrade to SQL 2008 R2.

Labels:

Exact Globe,

SQL 2008 R2,

support

Sunday, July 25, 2010

Back from holiday, new blog posts will come soon.

The last 3 weeks I have been on holiday. It was fantastic, good food, wine, relaxed a lot. We did a lot of nice activities with the children like swimming, playing tennis and golf, drive karts, climbing in trees and one of my favorites during holidays: fishing on carps.

Now the holiday is over, I will continue to post new articles about SQL Server. See you soon.

Now the holiday is over, I will continue to post new articles about SQL Server. See you soon.

Tuesday, July 20, 2010

How to retrieve numbers of records in a table with high performance without index or tablescans?

Photo Credit: dodge challenger1

Photo Credit: dodge challenger1The most common way to retrieve the number of records in table is done with a query like:

select count(*) from tableX where column1 = 'value'

Using this kind of queries will result in a (clustered) index scan. On tables with millions of records this can still take a while to execute and can result in unnecessary overhead on the SQL server. SQL server stores a lot of statistics of your tables. These statistics are used by the optimizer to generate the most efficient queryplan. You can use these statistics to retrieve the number of records in your database. This is always faster then to query the table itself.

SQL server uses his own mechanisme to update the statistics, so it can happen that there is a small mismatch between the number of records in a table and the number of records stored in the statistics. For big tables this is not always so important. For instance to display the growth of your tables.

You can use next query to retrieve the number of records per tables via the statistics:

SELECT sysobjects.name,rowcnt

FROM sysobjects

INNER JOIN sysindexes ON sysobjects.id=sysindexes.id

WHERE xtype='U' AND indid IN (0,1)

AND sysobjects.name = 'Your table'

To check for the number of records for a column with a specific value use next query. This query wil return the number of records per column value. Available columns are columns which exists as the first column in an index. Example you have a table: X with the column: Type. The column type is used as the first column in an index. Next query will return all existing values for the column type with the number of records.

DECLARE @TableName Nvarchar(200)

SET @TableName = 'Your Table'

DECLARE ColumnStatistics CURSOR FOR

SELECT Tbl.name AS TableName, SSID.name as IndexName ,

INDEX_COL( tbl.[name], idk.indid, 1 ) AS IndexColumn

FROM SYSINDEXkeys idk

INNER JOIN SYSOBJECTS tbl ON idk.[id] = tbl.[id]

INNER JOIN SYSINDEXES SSID on SSID.[ID] = TBL.[id] and ssid.indid = IDK.indid

WHERE tbl.ID = (SELECT ID FROM SYSOBJECTS WHERE name = @Tablename)

AND keyno = 1

OPEN ColumnStatistics

DECLARE @IndexName Nvarchar(200)

DECLARE @IndexColumn Nvarchar(200)

FETCH NEXT FROM ColumnStatistics INTO @TableName,@IndexName, @IndexColumn

WHILE @@FETCH_STATUS = 0

BEGIN

CREATE TABLE #TempTable (ColumnValue nvarchar(200),INT1 INT,EQ_ROWS INT,

INT3 INT,INT4 INT,TableName nvarchar(200))

INSERT INTO #TempTable (ColumnValue, int1,EQ_ROWS,int3,int4)

EXEC ('dbcc show_statistics(' +@TableName+','+@IndexName+') with histogram')